Open Source Can Make AI Trustworthy: Let’s Build Explainable AI Together

By Dr. Chris Hazard, CTO and co-founder of Howso

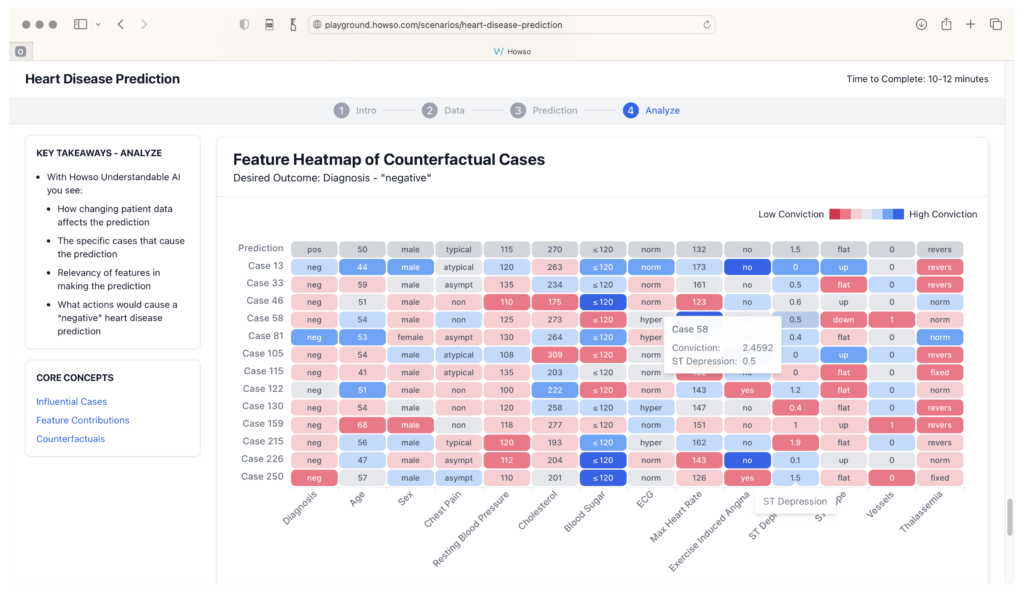

The Howso Engine in action

What’s one thing we can all agree on when it comes to AI? It’s not very trustworthy. Most people feel AI is not ready to make critical decisions about our personal and collective lives, because it messes up so often and we have no idea why. A query goes into a black box and an answer comes out, but we can’t see what data or reasoning the AI used to provide that answer. Often, the AI spits out correct information, but sometimes it is dangerously wrong.

Too many of us have begun to accept the faulty notion that black-box AI is inevitable, and that we must sacrifice integrity for performance. At Howso, we disagree. We’re building AI without compromise and open sourcing it so the world can join us.

Howso’s goal is to make AI trustworthy for everyone. It’s an ambitious objective, but we’re confident we can do it with the support of the open-source community. Last week, we announced our open-source AI engine on GitHub to allow data scientists, developers, academics, researchers, and students to build fully explainable AI apps and models. We’ve been developing our explainable AI engine for over a decade, and commercializing it since 2017. But we have made the bold choice to become an open-source company. Why? Because empowering the open-source community with powerful tools is the best way to ensure AI becomes safer and more transparent.

What exactly is Howso Engine and how can the open-source community use it? Howso Engine is a fully auditable ML framework that offers a powerful alternative to black-box AI libraries such as PyTorch and JAX. Most of these black-box AI platforms are built on a decades-old technology called “neural networks.” AI models built on neural networks are abstract representations of the vast amounts of data on which they are trained; they are not directly connected to training data. Thus, black-box AIs infer and extrapolate toward what the model deems the most likely answer, rather than working from the actual data.

Howso Engine, on the other hand, is built on instance-based learning (IBL), which is fully explainable. With IBL, every prediction, label, and generative output can be perfectly attributed back to the relevant data used for constructing the output. This allows users to audit and interrogate outcomes and intervene to correct mistakes and bias. This all works because IBL stores training data (“instances”) in memory. Howso Engine, aligned with the principles of “nearest neighbors” but powered by information theory, makes predictions about new instances given the likelihood of their relationships to existing instances. We hope data scientists, ML engineers, and developers will use the free open-source Howso Engine to create powerful AI models and apps that achieve state-of-the-art transparency and interpretability. Because if you can’t trust AI, what real use is it?
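To make the idea of attribution concrete, here is a minimal sketch of a plain nearest-neighbors classifier in Python. It is not Howso Engine's API or algorithm: it uses simple Euclidean distance and majority voting rather than information theory, and the function name `predict_with_attribution`, the toy data, and the "approve"/"deny" labels are all hypothetical, chosen only for illustration. What it shows is the general instance-based pattern: the training instances stay in memory, and every prediction is returned together with the exact instances that produced it.

```python
import math
from collections import Counter


def predict_with_attribution(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training instances.

    `train` is a list of (features, label) pairs kept in memory as-is.
    Returns (label, evidence), where evidence lists the neighbors that voted:
    (training index, features, label, distance to the query).
    """
    # Rank every stored instance by Euclidean distance to the query.
    ranked = sorted(
        (math.dist(features, query), idx)
        for idx, (features, _label) in enumerate(train)
    )
    nearest = ranked[:k]

    # Majority vote among the k closest instances.
    votes = Counter(train[idx][1] for _dist, idx in nearest)
    label = votes.most_common(1)[0][0]

    # The evidence is the audit trail: which stored rows drove this answer.
    evidence = [(idx, train[idx][0], train[idx][1], dist) for dist, idx in nearest]
    return label, evidence


# Hypothetical toy data: two numeric features per instance.
train = [
    ((1.0, 1.0), "approve"),
    ((1.2, 0.9), "approve"),
    ((0.9, 1.3), "approve"),
    ((4.0, 4.2), "deny"),
    ((4.1, 3.9), "deny"),
]

label, evidence = predict_with_attribution(train, (1.1, 1.0), k=3)
print("prediction:", label)
for idx, features, neighbor_label, dist in evidence:
    print(f"  instance {idx}: {features} -> {neighbor_label} (distance {dist:.3f})")
```

Because the evidence points at specific stored rows, a reviewer who finds a mislabeled or biased instance among them can correct or remove it and see the prediction change, which is the kind of audit-and-intervene loop described above.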

The potential for explainable AI is clear. Companies and governments could use IBL AI to meet security, regulatory, and compliance standards. IBL AI will also be particularly useful in any application where allegations of bias are common, such as hiring, college admissions, and legal cases. We can’t wait to see what the open-source community builds with Howso Engine!

Howso at ATO

Monday, October 16 at 3:45 pm ET

Business Track

Most “Open Source” AI Isn’t. And What We Can Do About That

Speaker: Dr. Chris Hazard

Tuesday, October 17 at 12:45 pm ET

Featured Lunch Talk • Machine Learning/AI track

Are Privacy and Intellectual Property Still Relevant? Perspectives From History, Biology, Math, and, of Course, AI

Speaker: Dr. Chris Hazard

Also, stop by our Booth #73/74 to meet the Howso team.

The Featured Blog Posts series highlights posts from partners and members of the All Things Open community leading up to ATO 2023.